To dig even deeper into deep learning, please have a look at the technical report I wrote on my findings (PDF document).

I have had the pleasure of diving into the deep waters of deep learning and learned to swim around.

Deep learning is a topic within the field of artificial intelligence (AI). It is a relatively new research area, although it builds on the popular artificial neural networks that supposedly mirror brain function. Research on artificial neural networks began with Frank Rosenblatt's development of the perceptron in the 1950s and 1960s. To further mimic the architectural depth of the brain, researchers wanted to train deep multi-layer neural networks; this, however, did not succeed until Geoffrey Hinton introduced Deep Belief Networks in 2006.

Recently, the topic of deep learning has gained public interest. Large web companies such as Google and Facebook have focused research efforts on AI and an ever-increasing amount of compute power, which has finally enabled researchers to produce results that are of interest to the general public. In July 2012, Google trained a deep learning network on YouTube videos with the remarkable result that the network learned to recognize humans as well as cats. In January this year, Google successfully used deep learning on Street View images to automatically recognize house numbers with an accuracy comparable to that of a human operator. In March this year, Facebook announced its DeepFace algorithm, which is able to match faces in photos with Facebook users almost as accurately as a human can.

To get some hands-on experience, I set up a Deep Belief Network using the Python library Theano. By showing it examples of human faces, I managed to teach it the features of a face, such that it could generate new and previously unseen samples of human faces.
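To give a feel for what such a network does under the hood, here is a minimal sketch of a single restricted Boltzmann machine (RBM), the building block that a Deep Belief Network stacks layer by layer. It is not the code from my experiments: it uses plain NumPy instead of Theano, trains with one step of contrastive divergence (CD-1), and learns two made-up 6-pixel patterns rather than face images. The class name `RBM`, the method names, and all hyperparameters are assumptions chosen for illustration.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


class RBM:
    """Hypothetical Bernoulli RBM trained with CD-1 (illustration only)."""

    def __init__(self, n_visible, n_hidden, rng):
        self.rng = rng
        self.W = rng.normal(0.0, 0.01, size=(n_visible, n_hidden))
        self.b_v = np.zeros(n_visible)  # visible biases
        self.b_h = np.zeros(n_hidden)   # hidden biases

    def hidden_probs(self, v):
        return sigmoid(v @ self.W + self.b_h)

    def visible_probs(self, h):
        return sigmoid(h @ self.W.T + self.b_v)

    def sample(self, probs):
        # Draw binary states from independent Bernoulli probabilities.
        return (self.rng.random(probs.shape) < probs).astype(float)

    def cd1_step(self, v0, lr=0.1):
        # Positive phase: hidden activations driven by the data.
        h0_prob = self.hidden_probs(v0)
        h0 = self.sample(h0_prob)
        # Negative phase: one Gibbs step back to the visible layer.
        v1_prob = self.visible_probs(h0)
        h1_prob = self.hidden_probs(v1_prob)
        n = v0.shape[0]
        self.W += lr * (v0.T @ h0_prob - v1_prob.T @ h1_prob) / n
        self.b_v += lr * (v0 - v1_prob).mean(axis=0)
        self.b_h += lr * (h0_prob - h1_prob).mean(axis=0)
        # Mean squared reconstruction error, used only for monitoring.
        return float(np.mean((v0 - v1_prob) ** 2))

    def generate(self, n_samples, n_gibbs=200):
        # Start from random noise and run a Gibbs chain to draw new samples.
        v = self.sample(np.full((n_samples, self.W.shape[0]), 0.5))
        for _ in range(n_gibbs):
            h = self.sample(self.hidden_probs(v))
            v_prob = self.visible_probs(h)
            v = self.sample(v_prob)
        return v_prob


rng = np.random.default_rng(0)
# Toy "images": two 6-pixel patterns, each repeated 20 times.
data = np.array([[1, 1, 1, 0, 0, 0], [0, 0, 0, 1, 1, 1]] * 20, dtype=float)

rbm = RBM(n_visible=6, n_hidden=4, rng=rng)
errors = [rbm.cd1_step(data) for _ in range(2000)]
samples = rbm.generate(n_samples=5)

print("reconstruction error: first %.3f, last %.3f" % (errors[0], errors[-1]))
print(np.round(samples, 2))
```

In a real Deep Belief Network, several such layers are trained one after another, each using the hidden activations of the layer below as its input, and the pixel vectors would be flattened face images rather than six toy pixels.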

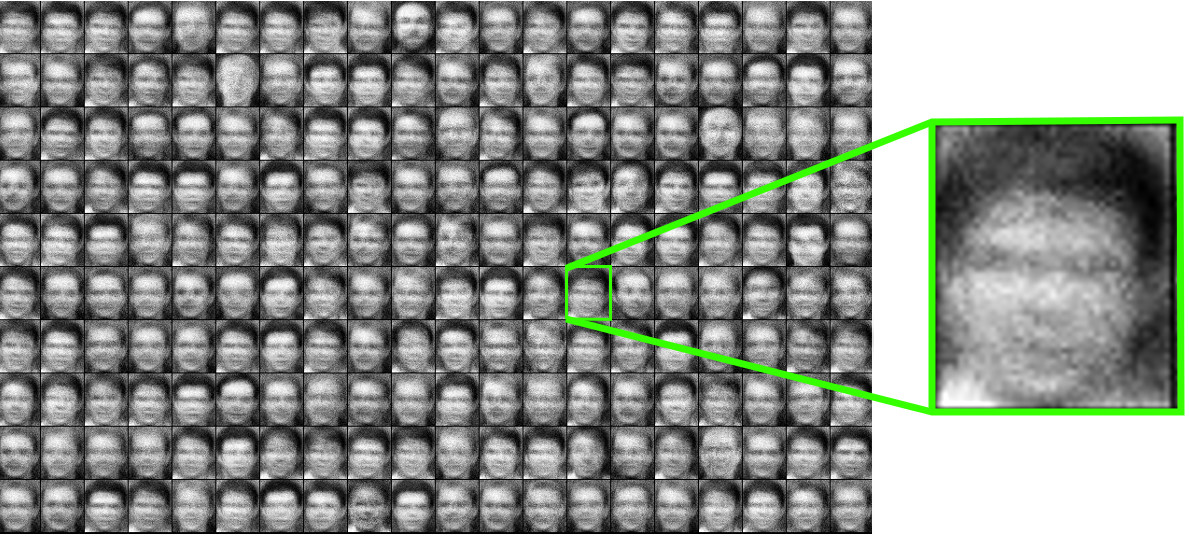

The ORL Database of Faces contains 400 images of the following kind:

By training with these images, the Deep Belief Network generated these examples of what it believes a face looks like:

The variation in the head position and facial expressions of the dataset makes the sampled faces a bit blurry, so I wanted to try out a more uniform dataset.

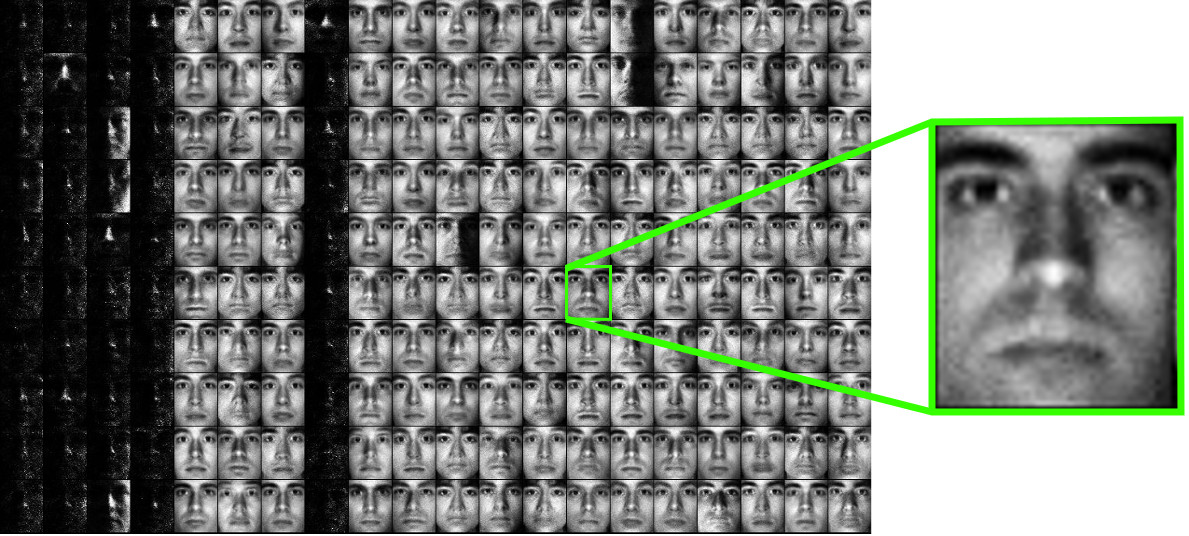

The Extended Yale Face Database B consists of images like the following:

The cropped version contains 2,414 images that are uniformly cropped to include just the faces.

After training on this dataset, the Deep Belief Network generated these never-before-seen images that actually look like human faces. In other words, these images are entirely computer generated, as a result of the deep learning algorithm. Based only on the input images, the algorithm has learned how to “draw” the human faces below: